Across East Africa, a quiet revolution is underway: the increasing deployment of Artificial Intelligence (AI) in surveillance technologies. From city centres to online spaces, AI is changing how observation and monitoring are conducted, raising important questions about security, privacy, and individual freedoms. In Nairobi, Kampala, and Dar es Salaam, significant AI-powered camera networks, often supplied by Chinese tech giants under agreements with governments, have been established. These systems, sometimes funded with limited public transparency, are capable of facial recognition, behavioural analysis, and real-time data transmission to law enforcement.

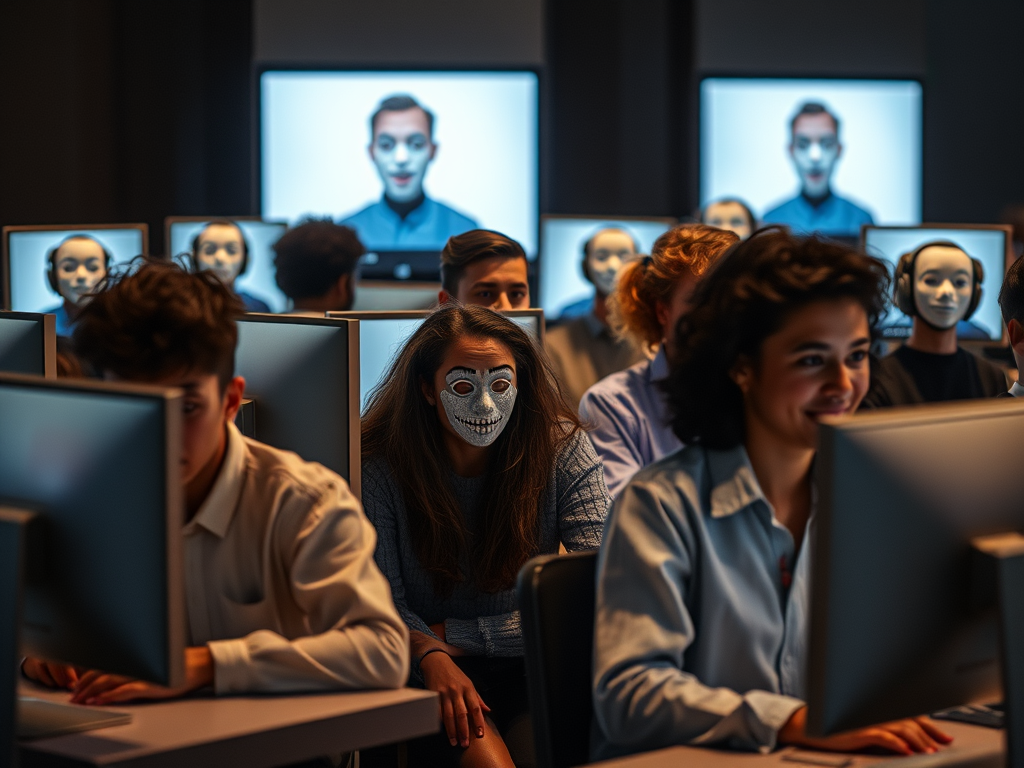

Governments and private entities in the region are investing in sophisticated AI-powered systems. These tools go beyond simple video recording, offering capabilities such as automatically identifying individuals in crowds, analysing patterns of behaviour, and even monitoring online communications. The usual justification is enhancing public safety, combating crime, and ensuring national security. However, reports from human rights organisations and news outlets suggest these systems have been used in ways that raise concerns about the suppression of dissent. For instance, during protests in Kenya, facial recognition technology has allegedly been used to identify and target individuals. Similarly, in Uganda, AI tools reportedly flagged opposition supporters based on their online activity during election periods.

The Technology at Work

AI surveillance operates through complex algorithms that learn from vast amounts of data. Some key technologies being implemented include:

- Facial Recognition: Cameras equipped with AI can scan faces and match them against existing databases, such as national identification records or watchlists. This allows for the real-time identification of individuals in public spaces. Reports indicate, however, that the accuracy of these systems can vary, particularly across demographic groups, potentially leading to misidentification.

- Behavioural Analysis: AI can be trained to recognise what is considered “normal” behaviour in a given context. Deviations from this norm can trigger alerts that flag individuals as “suspicious.” Systems in some East African cities have reportedly flagged actions like prolonged loitering or filming in certain areas, leading to intervention by authorities.

- Network Analysis: AI algorithms can analyse communication patterns online, looking for connections and keywords that might indicate threats or dissent. This can extend to social media activity and private messaging platforms. There are concerns that governments in the region are employing technologies to monitor online discussions and even encrypted communications.

- Object Detection: Beyond faces, AI can identify specific objects and scenes of interest, such as particular vehicle types or models, number plates, and gatherings of people. This technology is being used in various urban centres and at key infrastructure points across East Africa for monitoring and management.
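
To make the matching step behind facial recognition more concrete, here is a minimal Python sketch. It assumes, purely for illustration, that each face has already been reduced to a short list of numbers (an "embedding") and that two faces are compared by cosine similarity against a cut-off. The names `cosine_similarity`, `best_match`, and `watchlist`, the three-number embeddings, and the threshold values are all hypothetical; this is not the method of any system deployed in the region.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two vectors: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(probe, watchlist, threshold):
    """Return (name, score) for the closest watchlist entry.

    The name is None when the best score falls below the threshold.
    """
    best_name, best_score = None, -1.0
    for name, embedding in watchlist.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_score >= threshold:
        return best_name, best_score
    return None, best_score


# Hypothetical toy data: real embeddings have hundreds of dimensions.
watchlist = {
    "person_a": [1.0, 0.0, 0.0],
    "person_b": [0.0, 1.0, 0.0],
}
stranger = [0.8, 0.6, 0.0]  # someone who is NOT on the watchlist

strict_name, strict_score = best_match(stranger, watchlist, threshold=0.9)
loose_name, loose_score = best_match(stranger, watchlist, threshold=0.7)
print("strict threshold:", strict_name)
print("loose threshold:", loose_name)
```

In this toy case the stranger is rejected at the stricter cut-off but reported as `person_a` at the looser one. The choice of threshold, which is rarely disclosed, is one place where misidentification can creep in.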

Concerns and Implications

The rise of AI surveillance in East Africa is accompanied by growing concerns:

- Privacy Erosion: The continuous monitoring of public and digital spaces raises fundamental questions about the right to privacy and the security of personal data. Reports suggest that the data collected by these surveillance systems is not always handled under strict data protection rules, even in countries that have such frameworks.

- Potential for Abuse: There are increasing reports and allegations of AI surveillance tools being used to target activists, journalists, and political opposition, potentially chilling freedom of expression and assembly.

- Lack of Transparency: Information regarding the procurement, deployment, and operation of AI surveillance systems is often opaque, hindering public scrutiny and accountability. Contracts with technology providers are frequently not made public, making it difficult to assess the safeguards in place.

- Bias and Errors: Evidence suggests that the accuracy of facial recognition technology can vary significantly across different ethnicities, raising concerns about potential misidentification and unfair targeting of certain populations.
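
One way an independent auditor might quantify the bias concern is to compare false match rates across groups. The sketch below is a hedged illustration in Python: the function name `false_match_rate_by_group`, the record layout, and the sample records are all made up for this example and do not represent measurements from any real system.

```python
from collections import defaultdict


def false_match_rate_by_group(records):
    """Compute the false match rate for each group.

    Each record is (group, flagged, is_true_match). The rate is the share
    of people who were NOT a true match but were flagged anyway.
    """
    non_matches = defaultdict(int)
    false_matches = defaultdict(int)
    for group, flagged, is_true_match in records:
        if not is_true_match:
            non_matches[group] += 1
            if flagged:
                false_matches[group] += 1
    return {group: false_matches[group] / count
            for group, count in non_matches.items()}


# Invented audit records, for illustration only.
sample_records = [
    ("group_x", True, False),
    ("group_x", False, False),
    ("group_x", False, False),
    ("group_x", False, False),
    ("group_y", True, False),
    ("group_y", True, False),
    ("group_y", False, False),
    ("group_y", False, False),
    ("group_y", True, True),  # a correct match, so it is not counted
]

rates = false_match_rate_by_group(sample_records)
for group, rate in sorted(rates.items()):
    print(group, rate)
```

A gap between groups in such a table would be a signal worth investigating. Producing it, however, requires access to system outputs and ground truth that the opaque contracts described above rarely allow.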

Citizen Awareness and Response

As these technologies spread, communities are exploring ways to protect their rights and freedoms:

- Digital Security Practices: Individuals and organisations are increasingly adopting tools and practices to enhance their digital security and privacy, such as using encrypted communication apps and cloud storage, and taking part in user awareness training and capacity-building sessions.

- Counter-Surveillance Measures: Some individuals are experimenting with techniques to limit the effectiveness of facial recognition and other surveillance technologies in public spaces.

- Legal and Advocacy Efforts: Civil society organisations and legal professionals are challenging the legality and ethics of widespread AI surveillance through legal action and public advocacy.

Looking Ahead

The landscape of AI surveillance in East Africa is rapidly evolving. The potential integration of new technologies like DNA analysis and advanced drone surveillance raises further ethical and human rights concerns. Without clear legal frameworks, independent oversight, and a commitment to transparency, there is a risk of these powerful tools being used in ways that undermine fundamental rights and freedoms across the region. The ongoing developments in national digital identification systems and data retention policies will also significantly shape the future of surveillance and privacy in East Africa.
